DDoS: endless waves of space invaders targeting your website

dordy @ openclipart.org - CC0 1.0 Universal

Early in the morning on Saturday 19 August I received an innocent-looking email from my hosting provider. Apparently, my legacy CentOS 7 VPS was under heavy load. This VPS is still home to an Internet forum and a few other sites (until I'm ready to move them over to FreeBSD). Anyway, I thought that was a bit odd. What could be causing the heavy load? Maybe some web crawler gone mad? That seemed the most plausible explanation. I was reading this on my mobile phone during a family outing, so I had neither the time nor the tools at hand to diagnose it. I did try accessing the website, though, and it failed to load. Now I felt more worried!

Thankfully, once I was back home and reunited with my computer, I was able to log in via SSH without issue. It quickly became clear that the forum was being hit with waves of extremely high traffic, each wave lasting around 3-4 minutes, then subsiding for a minute or two before it hit again. The IP addresses involved were from around the globe: South Korea, Finland, Indonesia, Switzerland, Russia, USA, to name a few. Some of these were Tor exit nodes, but most seemed to be just regular IP addresses. In other words, what you would expect from a Distributed Denial of Service attack.[1]

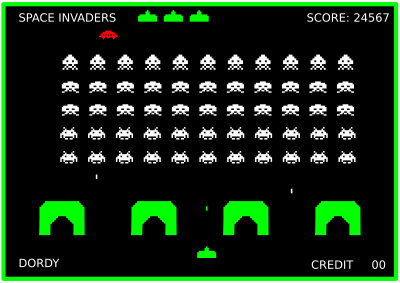

DDoS attack: multiple infected computers controlled by the attacker

Cisco - CC BY-SA 4.0

I had no prior experience with this sort of thing, but I stubbornly decided I was going to do something about it!

Fail2Ban to the rescue

So, I turned to Fail2Ban, since that is a tool I know and have been working with recently[2] (although that was on my FreeBSD VPS).

Well, I already had a Fail2Ban sshd jail enabled, as well as various jails for my mail services, but I had nothing for web traffic. So, I quickly found a few NGINX jails. The most relevant one seemed to be the nginx-limit-req jail, which is based on the NGINX ngx_http_limit_req_module[3] that can be used to limit the request processing rate per IP address. Eventually, I went with this configuration:

    http {
        ...
        limit_req_zone $binary_remote_addr zone=one:10m rate=1r/s;
        ...
        location / {
            limit_req zone=one burst=5 nodelay;
            ...
        }
        ...
    }

After making this change and tweaking the jail a little (e.g. setting a really long bantime and a very small maxretry), I noticed Fail2Ban was behaving slowly and sluggishly. It turned out it was consuming A LOT of RAM, so I made the difficult decision to shut down our Elasticsearch instance, as I was afraid the OOM killer would kill more important services.

This turned out to be a good decision. Thousands of IP addresses were banned by Fail2Ban (as was evident with a command such as fail2ban-client status nginx-limit-req) and therefore became blocked at the firewall level, no longer able to create any load on the system.
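To keep an eye on how large the ban list gets, the status output can be reduced to a single number. The following is a minimal sketch, not the command used during the attack; the helper name banned_count is made up for illustration, and it assumes the status output contains a "Currently banned:" line, as it does in the Fail2Ban versions I am aware of.

```shell
#!/bin/sh
# banned_count (hypothetical helper): read the text printed by
# `fail2ban-client status <jail>` on stdin and print only the number
# that follows "Currently banned:".
banned_count() {
    awk -F: '/Currently banned/ { gsub(/[ \t]/, "", $2); print $2 }'
}

# Typical use on a live system (needs root and a running fail2ban-server):
#   fail2ban-client status nginx-limit-req | banned_count
```

Reading from stdin rather than calling fail2ban-client inside the function means it can also be tried on saved status output.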

Blocking IP ranges with iptables

For good measure, I also used netstat -ntu commands to identify the IP ranges utilised the most heavily by the attackers, and then blocked these manually, directly in the firewall, using the iptables command:

    # Number of network connections per /16 range, busiest first
    netstat -ntu | awk '{print $5}' | cut -d: -f1 -s | cut -f1,2 -d'.' | sed 's/$/.0.0/' | sort | uniq -c | sort -nk1 -r

    # Number of network connections per /24 range, busiest first
    netstat -ntu | awk '{print $5}' | cut -d: -f1 -s | cut -f1,2,3 -d'.' | sed 's/$/.0/' | sort | uniq -c | sort -nk1 -r

    # Example command to block an IP address range
    iptables -A INPUT -s 123.45.67.0/24 -j DROP
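The counting and blocking steps above can be tied together in a small script. This is only a sketch under assumptions: the function names count_by_24 and suggest_blocks and the threshold argument are invented for illustration, and the script only prints iptables commands for review rather than running them.

```shell
#!/bin/sh
# count_by_24 (hypothetical helper): read one IPv4 address per line on stdin
# and print "count prefix.0/24", busiest range first. Lines that are not
# dotted-quad IPv4 addresses (headers, IPv6 fragments) are skipped.
count_by_24() {
    grep -E '^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$' |
        cut -d. -f1-3 |
        sed 's/$/.0\/24/' |
        sort | uniq -c |
        awk '{ print $1, $2 }' |
        sort -k1,1nr -k2,2
}

# suggest_blocks (hypothetical helper): print, but do not execute, an
# iptables rule for every /24 range with at least $1 connections.
suggest_blocks() {
    threshold=$1
    count_by_24 |
        awk -v t="$threshold" '$1 >= t { print "iptables -A INPUT -s " $2 " -j DROP" }'
}

# Typical use on a live system (the threshold of 50 is an arbitrary example):
#   netstat -ntu | awk '{print $5}' | cut -d: -f1 -s | suggest_blocks 50
```

Printing the rules instead of applying them leaves room to check that none of the ranges belongs to a legitimate user, or to yourself, before anything is dropped.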

The forum eventually became functional again despite the continuing attack waves. At around 7pm the attack started to tail off, and by 10pm it had come to an end. Victory!

Finishing up and further work

One remaining issue was that the fail2ban-server process had somehow ballooned to 1.3 GB of RAM usage. (This feels like a bug!) After waiting until the ban list had shrunk back down to a more normal number of IP addresses, I restarted the fail2ban service, and finally the fail2ban-server RAM consumption was back at an acceptable level. This allowed me to start up the Elasticsearch instance again, and with that normal service was resumed - almost!

The final issue was that I had configured the ngx_http_limit_req_module a bit too coarse-grained for the forum. A few legitimate users complained they were getting blocked, and I even got myself blocked a couple of times while investigating the issue. To remedy this, I have attempted to tweak the configuration further, but I have not yet arrived at a 100% satisfactory solution.
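One way to see who the limit is actually hitting is to count rejections per client in the NGINX error log. The sketch below assumes the default log wording, where a rejected request produces a line containing "limiting requests" followed by "client: <address>,". The function name rejections_per_client and the log path in the usage comment are assumptions, not taken from the setup described here.

```shell
#!/bin/sh
# rejections_per_client (hypothetical helper): read nginx error-log lines on
# stdin and print "count client-ip" for every client whose requests were
# rejected by limit_req, most-rejected client first.
rejections_per_client() {
    grep 'limiting requests' |
        sed -n 's/.*client: \([^,]*\),.*/\1/p' |
        sort | uniq -c |
        awk '{ print $1, $2 }' |
        sort -k1,1nr -k2,2
}

# Typical use (the log path is an assumption and varies between systems):
#   rejections_per_client < /var/log/nginx/error.log
```

A known regular user appearing near the top of this list would suggest that the rate or burst values are too tight for normal page loads.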

[1] Denial-of-service attack (wikipedia.org)

[3] The ngx_http_limit_req_module module (nginx.org)